Introduction: Beyond the Hype

It’s impossible to escape the constant drumbeat of headlines proclaiming that artificial intelligence is on the verge of transforming every industry imaginable. The narrative is often a dramatic one, focusing on disruption and replacement, painting a picture of a future where autonomous systems render human expertise obsolete. While the long-term impact of AI is certainly profound, the most interesting stories of 2026 are not about replacement, but about collaboration.

The ground truth of AI is far more nuanced: a story of trade-offs, specialized toolkits, and evolving human expertise. From developers building the next generation of software to scientists discovering life-saving drugs, professionals aren’t just using AI; they are actively shaping its role, figuring out what it does well, where it fails, and how to harness its power without falling victim to its pitfalls. The key differentiator is no longer whether you use AI but how you use it, and that marks the shift from casual user to strategic integrator.

This article cuts through the noise to distill the most surprising and impactful truths about how AI is actually being used in the professional world of 2026. Drawing on deep dives into AI’s use in coding and biopharma, we will reveal the nuanced reality of AI at work: a reality that separates the amateurs from the professionals in the new age of AI integration.

——————————————————————————–

1. The Myth of the ‘One Best AI’: Professionals Build a Specialized Toolbox

The common assumption has been that a single, dominant AI platform would emerge to rule each professional domain: one “best” tool for coding, one for scientific research, and so on. The reality on the ground in 2026 looks different. The age of simple AI adoption has given way to an age of AI integration, and in it professionals are achieving the best results not with a single tool but by assembling a custom “tag team” of specialized AIs.

In software development, for instance, the idea that a tool like GitHub Copilot would become the sole assistant for every developer has not panned out. Instead, a “specialist” dynamic has emerged. Many developers now use Cursor, an AI-native code editor, for “flow state” coding and fast, inline edits. For more complex tasks requiring deep architectural thinking, they switch to a different specialist: Claude Code, Anthropic’s command-line tool, which is designed for “delegation” and can refactor code across dozens of files from a single high-level command. The development firm Seedium notes that its own teams use a mix of Claude, Cursor, and GitHub Copilot, choosing the right tool for each project.

This trend extends far beyond coding. A Capgemini report on the biopharmaceutical industry reveals a similar pattern: executives see different types of AI as transformative for different phases of research and development. An overwhelming 74% believe generative AI holds the most potential for the initial drug discovery phase, while 51% see natural language processing (NLP) as the key technology for the pre-clinical testing phase. This points to AI maturing into a true professional toolkit in which, as for any master craftsperson, knowing which tool to pick for the task at hand is the skill that unlocks real productivity.

——————————————————————————–

2. AI Isn’t Replacing Experts, It’s Turning Them into “Orchestrators”

The fear that AI will simply replace highly skilled professionals like software developers and research scientists appears to be misplaced. Instead, the evidence from 2026 suggests AI is triggering a fundamental role shift, elevating experts from hands-on practitioners to high-level “orchestrators” of complex, AI-driven systems.

In software development, AI tools “can’t replace the critical thinking, architectural judgment, and domain expertise of human developers.” The same holds true in biopharma, where a staggering 76% of executives believe AI will fundamentally “reshape the role of scientists,” shifting their focus from “experimentation to orchestration.” The most valuable human input is no longer in performing repetitive tasks but in guiding, validating, and making strategic decisions based on AI-generated insights.

As a senior executive from a global pharmaceutical company explained to Capgemini researchers:

“AI will elevate scientists’ decision-making by automating routine tasks, allowing experts to focus on complex deviations and oversight.”

This transition, however, is not without risk. A key concern in the coding world is the potential “atrophy of the fundamental skills of junior developers” who may rely too heavily on AI without understanding the basics of what the tool is doing. This risk mirrors the evolution in the lab: in both fields, AI doesn’t eliminate the need for foundational expertise but makes it a prerequisite for effective orchestration. The most valuable professionals in the AI era will be those who can direct, validate, and integrate AI systems into their workflows, using deep domain knowledge to steer the technology toward the right outcomes.

——————————————————————————–

3. The Real Price of AI Isn’t the Subscription—It’s the Cleanup

While the monthly subscription fee for an AI tool is an obvious cost, the most significant investments and risks are hidden beneath the surface. The real challenges of implementing AI in 2026 are operational, cultural, and even ethical, often creating new forms of “cleanup” that go far beyond the initial price tag.

In software development, one of the biggest emerging problems is “AI-generated technical debt.” AI coding assistants can quickly generate solutions that appear to work but don’t scale or are written as “spaghetti code,” creating massive future problems that human engineers must untangle and fix. Another hidden cost is “review fatigue,” in which senior engineers become so overwhelmed by the sheer volume of AI-generated code that they begin “rubber-stamping” it without proper scrutiny.

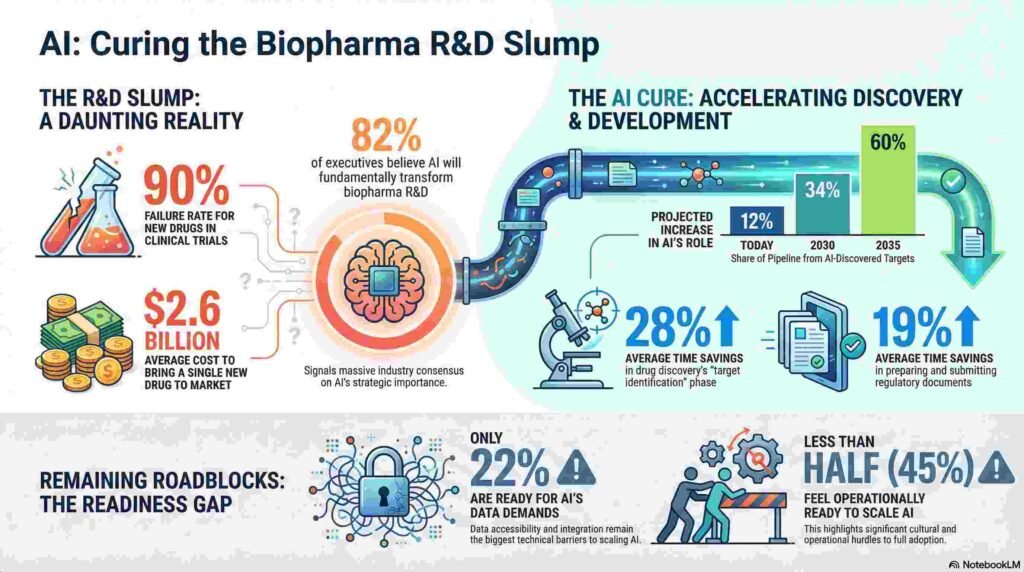

This technical cleanup has a direct parallel in the scientific domain, where the debt isn’t bad code but inadequate data infrastructure and a culture unready for the AI revolution. According to a major industry report, only 22% of biopharma organizations feel prepared for “data accessibility and integration,” and fewer than half (45%) feel “culturally and operationally ready to scale AI.” The true cost isn’t the AI license; it’s the substantial effort required to build the data infrastructure and foster the data-savvy culture needed to make it work.

Beyond the corporate walls, this cleanup extends to society itself, where the ethical debt of powerful AI tools is only beginning to be tallied. In AI video generation, for example, the ability of xAI’s chatbot Grok to create NSFW videos from images of people without their consent highlights a deeply concerning ethical dimension. These hidden costs, from technical debt and cultural resistance to ethical fallout, represent the true investment required to integrate AI responsibly and effectively.

——————————————————————————–

4. The Productivity Gains Are Real, and We Have Receipts

Despite the challenges and hidden costs, the promise of AI is not just hype. In fields that have successfully navigated the initial hurdles, AI is delivering tangible, measurable efficiency gains that are fundamentally altering the economics of innovation. We’re now moving past anecdotes and into an era of hard data.

Recent data from biopharmaceutical R&D, an industry plagued by decades of rising costs, provides some of the most compelling evidence. Industry executives reported specific, measurable productivity improvements:

- In Drug Discovery: Organizations using AI for target identification—the critical first step in finding a new drug—reported an average time savings of 28%.

- In Regulatory Submissions: For the complex process of preparing and submitting documents to regulators, AI adoption resulted in an average time savings of 19%.

These are not marginal improvements; on R&D timelines measured in years, they can amount to months of saved work and millions in saved costs. The forward-looking projections are even more dramatic: while only 12% of drug pipelines today consist of AI-discovered targets, executives expect that figure to reach 60% by 2035. The strategic imperative is clear.

“It is impossible to overestimate how vital it will be to see technological disruption revive biopharmaceutical R&D. Without it, the industry will find it increasingly difficult to fulfill its social mission, let alone attract investors interested in fair returns.”

—Thorsten Rall, Executive Vice President, Global Life Sciences Industry Lead, Capgemini

——————————————————————————–

Conclusion: Are You an AI User, or an AI Integrator?

The story of AI at work in 2026 is one of nuance. It departs from the simple narrative of replacement and moves toward a more complex reality of specialization, orchestration, and strategic integration. Success comes not from adopting a single “best” AI but from thoughtfully building specialized toolchains, preparing organizational culture for a new way of working, and managing the significant new responsibilities that come with these powerful capabilities.

The professionals thriving in 2026 are not mere AI users; they are sophisticated integrators. They are learning how to build specialized toolchains, orchestrate complex systems with critical judgment, and manage the technical and cultural cleanup required to unlock true, sustainable value.

As AI becomes a standard part of every professional toolkit, the defining question won’t be ‘Are you using AI?’ but ‘How thoughtfully are you integrating it?’